What is GCP Shared VPC?

GCP Shared VPC allows an organization to share or extend its VPC networks (or individual subnets within them) from one project, called the host project, to other projects, called service or tenant projects.

When you use Shared VPC, you designate a project as a host project and attach one or more other service projects to it. The VPC networks in the host project are called Shared VPC networks. Eligible resources from service projects can use subnets in the Shared VPC network.
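Here is a minimal Python sketch that models the host/service relationship as plain data. It is not the GCP API. `HostProject`, `Subnet`, `attach`, and `can_use_subnet` are hypothetical names. The sketch deliberately ignores IAM permissions and resource eligibility rules. It checks only two things: that a service project is attached, and that the requested subnet exists in one of the host's Shared VPC networks.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Subnet:
    # Hypothetical model of a subnet; not a GCP API type.
    name: str
    region: str


@dataclass
class HostProject:
    """Hypothetical model of a project designated as a Shared VPC host."""
    project_id: str
    # Shared VPC networks owned by the host: network name -> its subnets
    shared_networks: dict[str, list[Subnet]] = field(default_factory=dict)
    # IDs of service projects attached to this host
    service_projects: set[str] = field(default_factory=set)

    def attach(self, service_project_id: str) -> None:
        """Attach a service project so its resources may use the shared subnets."""
        if service_project_id == self.project_id:
            raise ValueError("a host project cannot attach itself as a service project")
        self.service_projects.add(service_project_id)

    def can_use_subnet(self, service_project_id: str, network: str, subnet: str) -> bool:
        """True if the project is attached and the subnet exists in the shared network."""
        if service_project_id not in self.service_projects:
            return False
        return any(s.name == subnet for s in self.shared_networks.get(network, []))


host = HostProject("net-host", {"shared-vpc": [Subnet("app-subnet", "us-central1")]})
host.attach("team-a")
print(host.can_use_subnet("team-a", "shared-vpc", "app-subnet"))  # True
print(host.can_use_subnet("team-b", "shared-vpc", "app-subnet"))  # False (not attached)
```

The network itself stays owned by the host project. Service projects gain only the ability to use its subnets.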

Is Shared VPC a Replacement for a Transit (Hub-and-Spoke) Network?

Shared VPC is not designed for transit networking and does not provide enterprise-grade routing or traffic engineering capabilities. Instead, Shared VPC lets organization administrators delegate administrative responsibilities, such as creating and managing instances, to Service Project Admins. The administrators still keep centralized control over network resources such as subnets, routes, and firewalls.
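The division of responsibilities described above can be sketched as a simple permission table. This hypothetical model assumes two roles named after the text: a central network administrator working in the host project, and a Service Project Admin. The role names and the `(project, resource, action)` tuples are illustrative only. They are not GCP IAM role IDs or permissions.

```python
from __future__ import annotations

from enum import Enum


class Role(Enum):
    # Hypothetical roles named after the text; these are not GCP IAM role IDs.
    NETWORK_ADMIN = "central network admin (host project)"
    SERVICE_PROJECT_ADMIN = "service project admin"


# Hypothetical permission table: (project kind, resource type, action) per role.
PERMISSIONS: dict[Role, set[tuple[str, str, str]]] = {
    Role.NETWORK_ADMIN: {
        ("host", "subnet", "manage"),
        ("host", "route", "manage"),
        ("host", "firewall", "manage"),
    },
    Role.SERVICE_PROJECT_ADMIN: {
        ("service", "instance", "manage"),
        ("host", "subnet", "use"),  # place instances on shared subnets, but not modify them
    },
}


def is_allowed(role: Role, project_kind: str, resource: str, action: str) -> bool:
    """Return True if the role may perform the action on that resource type."""
    return (project_kind, resource, action) in PERMISSIONS[role]


print(is_allowed(Role.SERVICE_PROJECT_ADMIN, "service", "instance", "manage"))  # True
print(is_allowed(Role.SERVICE_PROJECT_ADMIN, "host", "firewall", "manage"))  # False
```

Nothing in this table describes routing between networks. That gap is why Shared VPC does not replace a transit design.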

Aviatrix Transit Network Design Patterns with GCP Shared VPC

Aviatrix supports the GCP Shared VPC model. On top of it, Aviatrix builds cloud and multi-cloud transit networking architectures that provide enterprise-grade routing, service insertion, hybrid connectivity, and traffic engineering for the workload VMs. A number of deployment models are possible, but we will focus on two designs that use GCP Shared VPC networks.

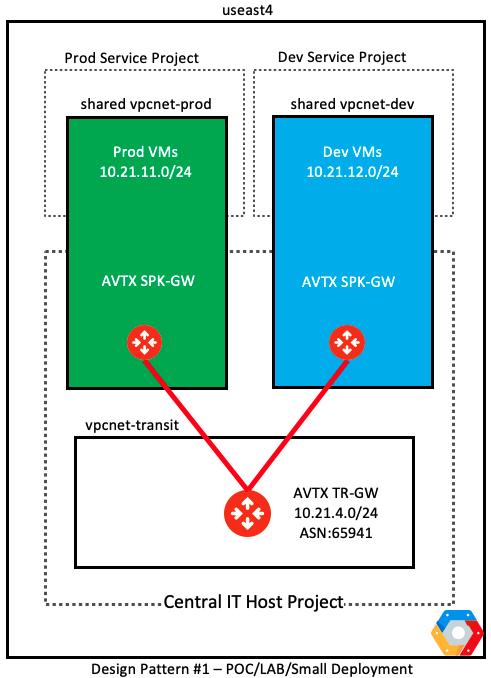

Design Pattern #1 – Aviatrix Spoke and Workload VMs in the Shared VPC Network

This design is suited for small, proof-of-concept (PoC), or lab deployments where the networking is kept very simple.

Deployment details for this design pattern are discussed here.

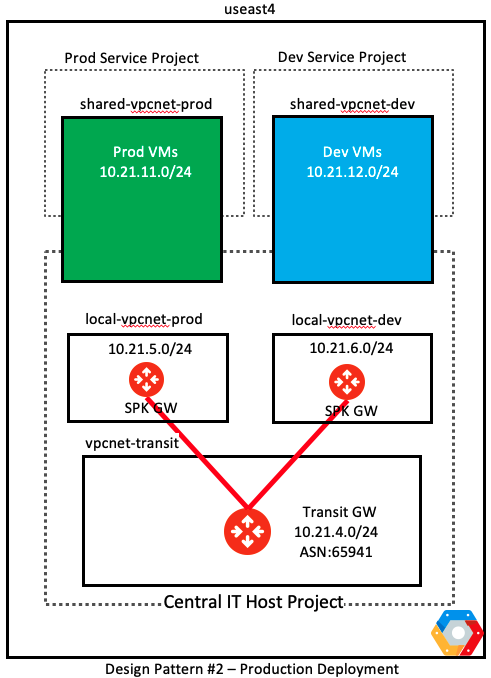

Design Pattern #2 – Aviatrix Transit in Host Project and Workload VMs in Shared VPC Network

- This is the recommended model for enterprises.

- The Aviatrix Transit and Spoke gateways (GWs) are deployed inside the host project, each in its own VPC network.

- These VPC networks are not shared; they stay local to the host project.

- The workload VPC networks are also created inside the host project and are shared with the service/tenant projects using GCP Shared VPC.
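The placement rules in the bullets above can be expressed as a small validation function. This sketch assumes a hypothetical inventory format: a `VpcNetwork` record with the `purpose` labels `transit`, `spoke`, and `workload`. It checks only the rules stated here and is not an Aviatrix or GCP API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VpcNetwork:
    # Hypothetical inventory record; not an Aviatrix or GCP API type.
    name: str
    project: str   # project that owns the network
    purpose: str   # "transit", "spoke", or "workload" (hypothetical labels)
    shared: bool   # True if shared with service projects via Shared VPC


def check_pattern_2(host_project: str, networks: list[VpcNetwork]) -> list[str]:
    """Return human-readable violations of the Design Pattern #2 placement rules."""
    problems: list[str] = []
    for net in networks:
        # Every network, gateway or workload, is created in the host project.
        if net.project != host_project:
            problems.append(f"{net.name}: must be created in host project {host_project}")
        # Aviatrix Transit and Spoke gateway networks stay local to the host.
        if net.purpose in ("transit", "spoke") and net.shared:
            problems.append(f"{net.name}: gateway networks must not be shared")
        # Workload networks are shared with the service/tenant projects.
        if net.purpose == "workload" and not net.shared:
            problems.append(f"{net.name}: workload networks must be shared")
    return problems


layout = [
    VpcNetwork("avx-transit", "host-prj", "transit", shared=False),
    VpcNetwork("avx-spoke", "host-prj", "spoke", shared=False),
    VpcNetwork("workload-a", "host-prj", "workload", shared=True),
]
print(check_pattern_2("host-prj", layout))  # []
```

Returning a list of violations, rather than failing on the first one, makes it easier to review an entire proposed layout at once.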

Deployment details for this design pattern are discussed here.
