Let’s distinguish between Large Language Models (LLMs) and Deep Learning Recommendation Models (DLRMs). Large Language Models […]

When you read the title, a few things come to mind. Q: “What does it actually […]

Q: How do neural networks learn from data? Please describe the main process or methodology used. […]

NetFlow v9 gives you two options: either IPT (IP Network Traffic) or L7 (Layer 7 […]

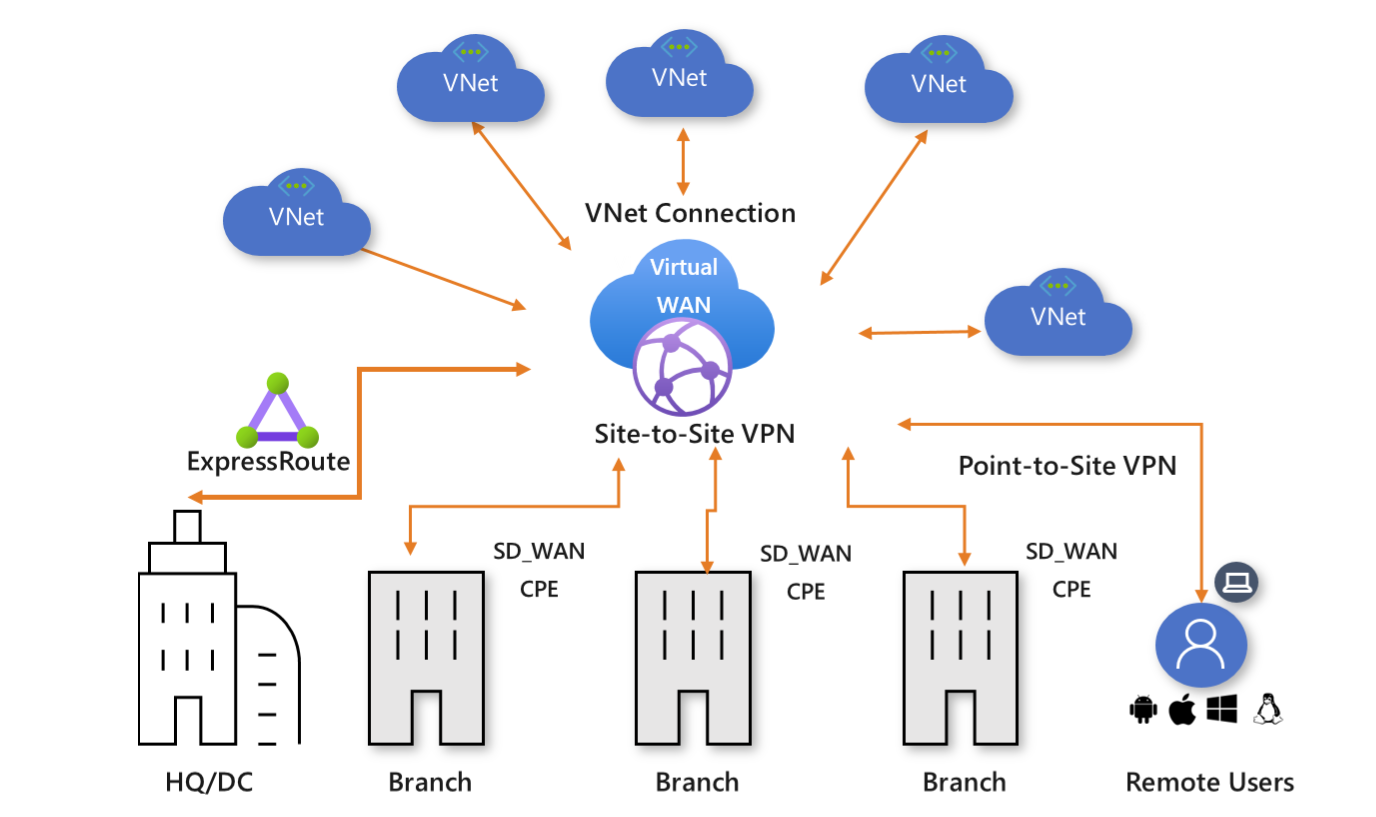

Azure Virtual WAN is a networking service that combines many networking, security, and routing functionalities to […]

Recently, I attended the RSA Conference 2024, a premier gathering showcasing the most innovative cybersecurity advancements. […]

I thought the private cloud definition was well established, but recently, an eLearning course caused some […]

Aviatrix’s Distributed Cloud Firewall: Harnessing open-source technologies for enhanced security. Introduction: The need for […]

From my perspective, this deal holds significant promise, as I explained in my detailed article. One […]

It is time for network admins, engineers, architects, and technology leaders to adopt a clear path […]

Enterprise enablement and technical pre-sales are challenging job functions in any organization. A typical instructor, engineer, […]

You must have heard that the native networking and security services provided by CSPs (Cloud Service […]

This blog covers how AWS and Aviatrix, working together, reduce AWS NAT Gateway costs with improved […]

What is AWS Transit Gateway (AWS-TGW)? AWS Transit Gateway is a service that allows customers to […]

A cloud network backbone, also known as a cloud backbone, is a high-speed network infrastructure that […]

This is a five-part series of articles examining five critical mistakes organizations make when building a […]

AKS (Azure Kubernetes Service) is a managed Kubernetes (K8s) service from Azure. See https://learn.microsoft.com/en-us/azure/aks/configure-azure-cni#frequently-asked-questions. Q: What […]

Black-box NaaS vs. Aviatrix Enterprise NaaS (Shahzad). Some vendors provide a NaaS offering that forces enterprise […]

The C-suite/executive audience needs to understand the importance of networking. Networking is a broad topic and […]

AWS recently launched a new service called AWS Network Firewall (NWFW). The AWS NWFW will be […]

GCP design with a global VPC in transit and spoke, with same-AZ spoke gateway distribution. GCP […]

Every public cloud is drastically different. Their networking and security models are 180 degrees apart from each […]

Aviatrix Introduction. Aviatrix Business Value. Joint Solution Brief. Next-generation solutions using Aviatrix Secure Cloud […]

Software as a Service (SaaS), or as some call it, NaaS (Network as a Service), is […]

You would assume that migrating applications to the cloud would accelerate your digital transformation, but it is […]